Every now and then an idea comes along that shatters your perception of what you think is possible. It's the kind of idea that stops you like a deer in the headlights, shelving all your other projects as you ponder the applications of this one idea, the realisation of which would look like something straight out of a sci-fi film.

In fact, ideas like this are why I work in this space. I'm literally giddy with excitement as I write this because it's both insanely cool and yet entirely doable! Interest piqued? Good, let's get to it. What on earth am I talking about?

The idea is this. Imagine having an entire workforce under your command, all of whom are AI agents. They never sleep, never tire, always improve. Their aim? Whatever you say it is. Impossible, I hear you say? Not so, I reply. Here's why.

OpenAI (the makers of ChatGPT) provide an API for developers to make use of their AI models in ways not limited by the ChatGPT interface. Typically, people interact with these models by creating an application that sends a model some form of request and receives a response in answer to that request. A profound interchange, basic as it is.

The response from the API comes back as JSON, a structured data format that can be manipulated in various ways to pretty much any end a developer can think of. They might convert the data into a table for ease of viewing. They might perform an action based on the data's contents. They might update part of the user interface to indicate that some threshold in the data has been passed. And on and on it goes.
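To make that concrete, here's a minimal sketch of pulling a reply out of a JSON response and acting on its contents. The sample payload is hand-built to imitate the general shape of a chat completion response rather than captured from a real call, and the `open_tickets` field and both function names are made up purely for illustration.

```python
import json


def extract_reply(raw_response: str) -> str:
    """Pull the assistant's text out of a JSON response body."""
    data = json.loads(raw_response)
    return data["choices"][0]["message"]["content"]


def threshold_passed(reply_text: str, limit: int) -> bool:
    """Treat the reply itself as JSON and check a hypothetical counter."""
    payload = json.loads(reply_text)
    return payload["open_tickets"] > limit


# Hand-built sample standing in for a real API response (an assumption).
sample = json.dumps({
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": json.dumps({"open_tickets": 42}),
            }
        }
    ]
})

reply = extract_reply(sample)
if threshold_passed(reply, limit=30):
    print("Threshold passed: update the UI")
```

In a real app the `print` would be whatever action you care about: redrawing a table, firing a notification, or kicking off another request.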

These exchanges, sophisticated as they are, are still limited in that it's one client exchanging with one model. So apps built in this way, again sophisticated as they are, still follow a very basic premise.

Here's where it gets cool.

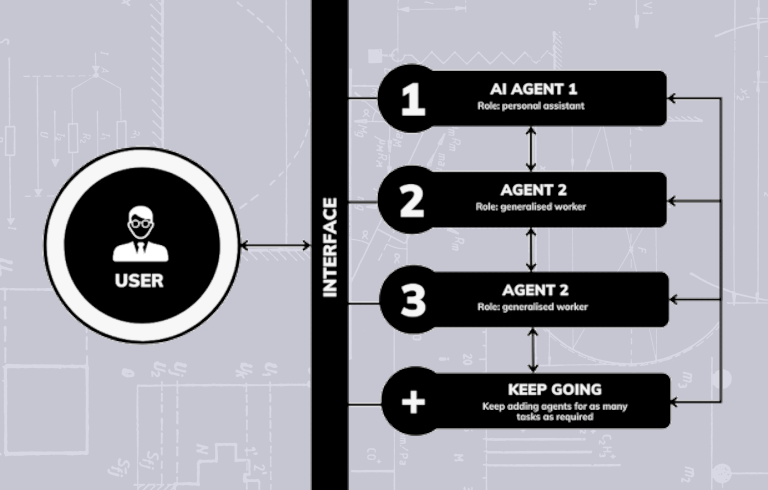

Let's say, instead of this basic back and forth, we consider the following. The API is already built such that it can be molded to various whims. We can essentially engineer our inputs to get very specific and specialised outputs. So let's say we create a number of prompts to mirror a number of roles within an organisation. Supplemented with custom code to do all manner of things, what do we have? Effectively an unlimited workforce.

We might have a prompt engineered to act as a personal assistant, translating our requests into actionable steps. We might have another, a generalised worker that iterates through those steps, breaking each one down into something it understands and calling on custom code to perform specific tasks like web searches or data analysis. I could go on and on, but hopefully the power of this is evident. The impact of these things compounds.

In practice, then, this idea is as simple as writing a program that chains requests together, allowing the API to talk to itself under the guise of various specialisations. Or at least that would make a sufficient version 1, but let's go even deeper!
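Here's a rough sketch of what that chaining could look like. It isn't my actual implementation: the `Agent` class, the `run_chain` helper, and the prompts are all hypothetical, and `fake_model` is a stand-in so the example runs offline. In a real version you'd swap it for a function that sends the system prompt and message to the API and returns the reply text.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" is any function taking (system_prompt, user_message) and returning text.
ModelFn = Callable[[str, str], str]


@dataclass
class Agent:
    """One specialisation: a name plus the prompt that defines its role."""
    name: str
    system_prompt: str

    def run(self, message: str, model: ModelFn) -> str:
        return model(self.system_prompt, message)


def run_chain(agents: list[Agent], request: str, model: ModelFn) -> str:
    """Give the request to the first agent, then pass each output to the next."""
    message = request
    for agent in agents:
        message = agent.run(message, model)
    return message


def fake_model(system_prompt: str, user_message: str) -> str:
    """Offline stand-in for an API call: tags the message with the agent's role."""
    role = system_prompt.split(".")[0]
    return f"[{role}] {user_message}"


assistant = Agent("assistant", "Personal assistant. Turn requests into actionable steps.")
worker = Agent("worker", "General worker. Carry out each step you are given.")

result = run_chain([assistant, worker], "Plan a product launch", fake_model)
print(result)
```

Passing the model in as a plain function keeps the chaining logic separate from the API client, which also makes it easy to test without spending any tokens.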

Once built, we can then drastically improve its utility even further by leveraging gamification principles. We might build a user interface that makes it easier to visualise and organise the process as a whole. For example, giving each 'agent' an avatar and animations highlighting where in the process lifecycle it's at as it iterates and bounces back and forth between other agents. We could add speech-to-text support that allows us to give verbal instructions and text-to-speech so that it talks back to us. We could add controller support so that the system is as easy to use as a video game, so that we can direct our 'agency' to do our bidding as we lie in bed idly 'playing' at being the boss.

Heck, we could even engineer the prompts in such a way as to give each agent a personality, or wrap the entire process behind a single 'agent' to replicate working with a single person.

Very exciting stuff.

Admittedly I'm not very far along, but I don't believe it's as complicated to set up as it sounds. I've already experimented with creating specialised prompts (Ghost writer is a project that does this, providing an interface that allows a user to quickly generate text content on a specific topic in a variety of styles). I've also experimented with talking to a custom AI model with speech-to-text and having it respond with text-to-speech (Voice GPT responds to verbal input in the voice of GLaDOS from Portal!).

The next step is to build a simplified proof of concept that incorporates a couple of different specialised prompts that can bounce ideas back and forth before returning the results to me (currently in progress). If this is successful, all the pieces are there and the project as a whole just needs to be put together.

As of 11/12/23 I have an MVP of the basic interface, and the first two agents are set up ready to communicate. I just need to build the mechanism that parses the output from the first before submitting it to the second... But I've been coding all morning so am taking a break ^_^.
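For the curious, that parsing step might look something like the sketch below. It assumes the first agent replies with a numbered or bulleted list of steps, which is my guess at a convenient format rather than a description of the finished mechanism. The `parse_steps` name and the sample output are hypothetical.

```python
import re


def parse_steps(text: str) -> list[str]:
    """Extract items from lines that look like '1. do this', '2) do this' or '- do this'."""
    steps = []
    for line in text.splitlines():
        match = re.match(r"^\s*(?:\d+[.)]|[-*])\s+(.*\S)\s*$", line)
        if match:
            steps.append(match.group(1))
    return steps


# Made-up example of what the first agent might return.
first_agent_output = """Here is the plan:
1. Research competitors
2) Draft the announcement
- Schedule the launch email
"""

# Each parsed step would then be submitted to the second agent in turn.
for step in parse_steps(first_agent_output):
    print(step)
```

Lines that don't look like list items, such as the introductory sentence, are simply skipped, so a bit of chatter from the model doesn't end up being sent on as a task.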
